What an effective data ingestion framework looks like

Enterprises are engulfed by data. To store and manage it effectively, you need a data ingestion framework. Here's what that looks like.

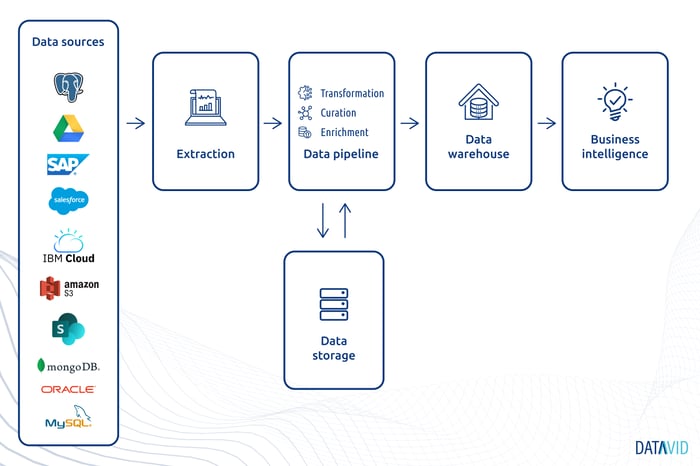

The data ingestion framework is largely defined by the data processing requirements of an enterprise. Broadly, the data ingestion framework includes data integration software, data repositories, and data processing tools.

Data is the way of the future, and there is real value in every interaction a user has, whether with the internet or with a business.

Social media has shown how massive troves of data can be managed and controlled.

To collect all of that data and make sense of it, companies must consider creating an effective data ingestion framework.

The modern data ingestion framework

A data ingestion framework is the process of extracting data from various sources and loading it into data integration software, data repositories, and data processing tools.

The framework's design depends heavily on the data sources, the data formats, the speed at which data arrives, and the desired business outcome.

Data ingestion framework constituents:

To make the available data more valuable, organisations should consider the following components when designing the framework:

- Data integration software – Data integration tools convert compiled data into actionable information for the company by consolidating the mass of raw figures into smaller, more digestible pieces.

- Data repositories – The locations within a technology system where the actual data is stored. A repository that stays isolated within a single department becomes a data silo.

- Data processing tools – These tools sort out the relevant pieces of data from the non-relevant ones, enhancing the trustworthiness and manageability of the data.

Each of these pieces serves an important role in how data is stored, accessed, and used.
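To make the three roles concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the sample CSV and JSON inputs, the `integrate` and `process` functions, and the in-memory `Repository` class are hypothetical and do not represent any particular product.

```python
import csv
import io
import json

# Hypothetical raw inputs standing in for two differently formatted sources.
CSV_SOURCE = "user_id,event\n1,login\n2,\n"
JSON_SOURCE = '[{"user_id": 3, "event": "purchase"}]'


def integrate(csv_text, json_text):
    """Data integration: bring both sources into one common record shape."""
    records = [
        {"user_id": int(row["user_id"]), "event": row["event"]}
        for row in csv.DictReader(io.StringIO(csv_text))
    ]
    records += [
        {"user_id": int(item["user_id"]), "event": item["event"]}
        for item in json.loads(json_text)
    ]
    return records


class Repository:
    """Data repository: where the ingested records are stored (in memory here)."""

    def __init__(self):
        self._rows = []

    def save(self, records):
        self._rows.extend(records)

    def all(self):
        return list(self._rows)


def process(records):
    """Data processing: keep only the records that carry a usable event."""
    return [r for r in records if r["event"]]


repo = Repository()
repo.save(integrate(CSV_SOURCE, JSON_SOURCE))
usable = process(repo.all())
print(usable)
```

In a real framework each role would typically be filled by dedicated software rather than a few functions, but the division of responsibilities is the same.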

Any organisation with access to a large amount of data should review how its data ingestion framework is set up and whether it could be using that data more effectively.

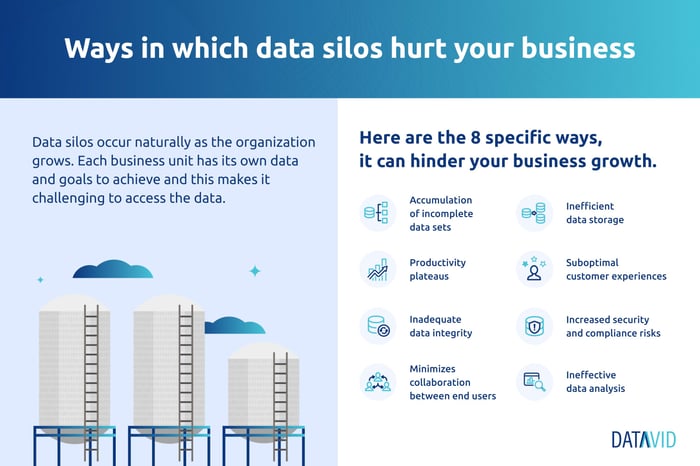

The spotlight is on data silos

It is important to look at data silos for what they are while designing your data ingestion framework.

Clicdata.com provides a clear-cut definition of a data silo:

A data silo, also known as an information silo, is a repository of information in an organization’s department that remains under the control of the said department but is isolated from the rest of the organisation.

Data silos tend to occur naturally in businesses of all types.

As those organisations grow larger, data silos become a stumbling block if the goal is to share as much data and information across teams as possible.

Problems data silos cause

A few of the issues with data silos are as follows:

- Technology problems – Data silos slow the transfer of information from one department to another because the data gets trapped in one specific area of the organisation.

- Damage to workplace culture – Data silos disrupt the culture of collaboration within enterprises by creating barriers between departments that are unwilling to share the data they hold.

- Hindrance to growth – Data silos leave certain departments without the data and information they need to grow as effectively as they otherwise would.

Given these disadvantages, breaking down data silos becomes a top priority for business owners.

The only upside of data silos is that they can potentially insulate certain pieces of data from being exposed or corrupted in the event of a massive data breach.

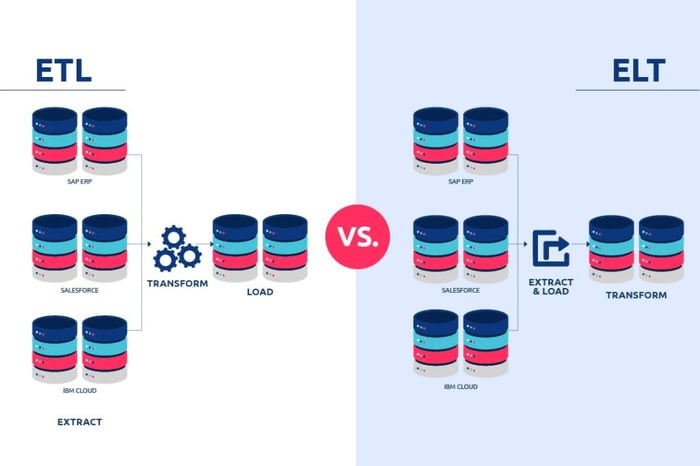

ETL vs ELT

ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) are two different approaches to getting data from Point A to Point B.

In ELT, the extracted data is loaded directly into the destination system and transformed there, which typically makes the load step faster than in ETL.

In ETL, the extracted data is transformed first and only then loaded into the target system.

ELT is less tried and tested than ETL, yet it is often well suited to the way data is transferred today.

ELT also reduces the maintenance workload, which suits organisations that do not have a sprawling IT infrastructure, and it still gets the job done.
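The difference in ordering can be sketched with Python's built-in `sqlite3` module. This is an illustration under stated assumptions, not a production pipeline: the sample rows, the table names (`orders_etl`, `orders_raw`, `orders_elt`), and the helper functions are all hypothetical, and an in-memory SQLite database stands in for the destination system.

```python
import sqlite3

# Hypothetical extracted rows: (customer name, amount as text).
RAW_ROWS = [(" Alice ", "10.50"), ("BOB", "4.25"), ("alice", "1.00")]


def run_etl(conn, rows):
    """ETL: transform in application code first, then load the clean rows."""
    cleaned = [(name.strip().lower(), float(amount)) for name, amount in rows]
    conn.execute("CREATE TABLE orders_etl (customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?)", cleaned)


def run_elt(conn, rows):
    """ELT: load the raw rows unchanged, then transform inside the destination."""
    conn.execute("CREATE TABLE orders_raw (customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?)", rows)
    conn.execute(
        "CREATE TABLE orders_elt AS "
        "SELECT lower(trim(customer)) AS customer, "
        "CAST(amount AS REAL) AS amount "
        "FROM orders_raw"
    )


def totals(conn, table):
    """Sum the amounts per customer in the given (trusted) table name."""
    query = (
        f"SELECT customer, SUM(amount) FROM {table} "
        "GROUP BY customer ORDER BY customer"
    )
    return conn.execute(query).fetchall()


conn = sqlite3.connect(":memory:")
run_etl(conn, RAW_ROWS)
run_elt(conn, RAW_ROWS)
etl_totals = totals(conn, "orders_etl")
elt_totals = totals(conn, "orders_elt")
print(etl_totals)
print(elt_totals)
```

Both routes end with the same cleaned totals. The ELT route additionally keeps the untouched `orders_raw` table in the destination, so the transformation can be rerun or changed later without extracting the data again.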

Getting started with the data ingestion framework

Before you start designing the framework, consider data velocity, data complexity, customer requirements, regulation and compliance needs, and the goal you want to achieve.
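One way to keep these considerations visible during design is to write them down in a small structure. The sketch below is purely illustrative: the `IngestionRequirements` class, its field names, and the threshold of 100 events per second are assumptions made for this example, not recommendations from any tool or standard.

```python
from dataclasses import dataclass, field


@dataclass
class IngestionRequirements:
    """Hypothetical checklist of the design inputs listed above."""

    goal: str                                   # the outcome to be achieved
    events_per_second: float                    # data velocity
    source_formats: list = field(default_factory=list)         # data complexity
    customer_requirements: list = field(default_factory=list)
    regulations: list = field(default_factory=list)            # compliance needs

    def suggested_mode(self, streaming_threshold=100.0):
        """Illustrative rule only: high velocity suggests streaming, else batch."""
        if self.events_per_second >= streaming_threshold:
            return "streaming"
        return "batch"

    def open_questions(self):
        """Return the names of the list fields that are still empty."""
        checks = {
            "source_formats": self.source_formats,
            "customer_requirements": self.customer_requirements,
            "regulations": self.regulations,
        }
        return [name for name, value in checks.items() if not value]


reqs = IngestionRequirements(
    goal="daily sales reporting",
    events_per_second=5,
    source_formats=["csv", "json"],
    customer_requirements=["dashboard refreshed every morning"],
)
print(reqs.suggested_mode())
print(reqs.open_questions())
```

Here the empty `regulations` list is flagged as an open question, a reminder to confirm whether any compliance rules apply before the design is finalised.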

A data ingestion tool like Datavid Rover can simplify the entire process with built-in connectors that allow rapid and secure data ingestion from any source.

Datavid Rover takes the entire enterprise data lifecycle into account, removing overhead costs and increasing compliance.

Frequently Asked Questions

What is a data ingestion framework?

A data ingestion framework is the process of extracting data from various sources and loading it into data integration software, data repositories, and data processing tools.

What is a data processing tool?

A data processing tool transforms collected data into usable information.

What are the main components of a data ingestion framework?

A data ingestion framework consists of data integration software, data processing tools, and data repositories.