How to handle unstructured data in big data effectively

Unstructured data is all around us, but how do you make sense of it? Learn about how big data solutions aim to solve this problem.

Unstructured data is raw, unorganised information that is hard to retrieve or analyse without knowing its exact location. Handling it at scale is one of the key problems that big data solutions have aimed to solve since the late 2000s.

Most data out there is unstructured:

- The contents of a Word file sitting in a folder on your PC

- Photos (and their metadata) stored on an SD card

- Messages sent via a private enterprise network

- Session data from a person visiting a website

All of this information sits isolated in its raw form and originally intended location, without being “structured” in a way that allows retrieval or analysis at scale.

As a concept, big data caught a lot of attention because it promised that machines could give unstructured data more meaning, thus making it more useful.

The relationship between unstructured data & big data

The term “big data” was coined to describe a problem: an increasing volume of unstructured data being generated at a rate that was impossible for humans to handle.

Traditional ways of structuring data to give it meaning required a lot of work sourcing it, designing relational databases, and properly formatting it to fit certain rules.

When information goes through this process of being “centralised” into a form that is consistent and understandable by humans, it becomes structured.
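As a rough illustration of what "structuring" means in practice, the sketch below turns raw session log lines into records with consistent fields. The log format, the `SessionEvent` fields, and the `parse_session_line` helper are all assumptions made for this example, not a format used by any particular system.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical raw format: "<ip> <ISO timestamp> <path>"
LINE_PATTERN = re.compile(r"^(?P<ip>\S+)\s+(?P<timestamp>\S+)\s+(?P<path>/\S*)$")


@dataclass
class SessionEvent:
    """A structured record with the same fields for every event."""
    ip: str
    timestamp: str
    path: str


def parse_session_line(line: str) -> Optional[SessionEvent]:
    """Return a structured record, or None if the line does not fit the rules."""
    match = LINE_PATTERN.match(line.strip())
    if match is None:
        return None
    return SessionEvent(**match.groupdict())


raw_lines = [
    "203.0.113.7 2024-01-15T10:32:00Z /products/42",
    "this line is free text and has no structure",
    "198.51.100.23 2024-01-15T10:33:10Z /checkout",
]

# Keep only the lines that could be given a structure.
events = [
    event
    for event in (parse_session_line(line) for line in raw_lines)
    if event is not None
]
```

Lines that do not fit the expected rules are simply dropped here. Doing this by hand for every source is the kind of work that becomes unmanageable as the volume of raw data grows.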

When there is too much of this unstructured data scattered across sources, you can only resort to big data concepts such as data lakes or warehouses to analyse it properly.

The modern understanding of unstructured data

The internet is the primary reason why the volume of unstructured data being generated became basically impossible for organisations to analyse in the late 2000s.

Suddenly you had millions of people shifting their focus to pushing out public data in seemingly random ways, and it was hard to give all of it a structure.

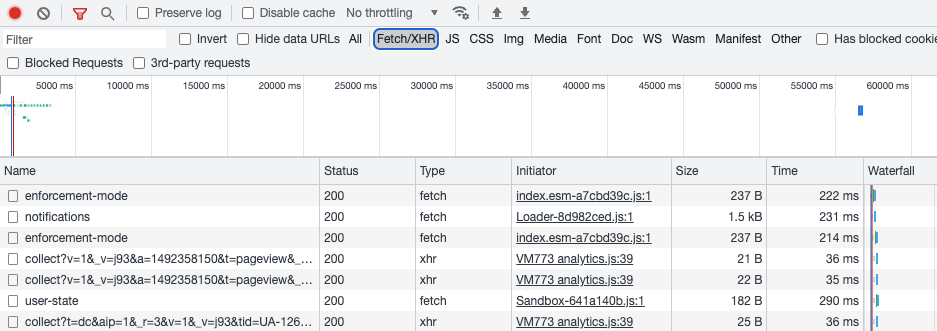

Note that unstructured data isn’t just something you create as a human; it can also be machine-generated, such as session information when visiting a website.

This information was (and still is) extremely valuable to organisations as they tried to understand the behaviour of people buying products or services online.

A question often came up then:

“How do you analyse all of this data?”

The answer was: you don’t.

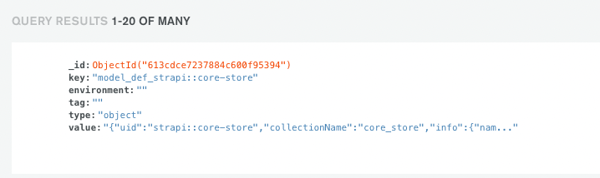

You simply store it for the time being and try to make sense of it later on. This is when non-relational (i.e. NoSQL) databases such as Apache Cassandra and MongoDB, and later ScyllaDB, started becoming popular:

- They often scaled out more easily and performed well for simple, high-volume reads and writes

- They didn’t require you to define a schema beforehand

- You could store massive amounts of unstructured data in them

This all meant that NoSQL databases were better suited to handling unstructured data than traditional relational DBs, leading to big data as the solution to process and analyse it.
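The "store now, make sense of it later" idea can be sketched in plain Python. This is only a stand-in for a schemaless document store, not how any specific NoSQL database works internally; the document shapes and helper names (`load_documents`, `count_by_type`, `pages_visited`) are hypothetical.

```python
import json
from collections import Counter

# Documents with different shapes; no schema is declared up front.
raw_documents = [
    {"type": "message", "sender": "alice", "body": "Quarterly report attached"},
    {"type": "photo", "filename": "IMG_001.jpg", "metadata": {"camera": "X100"}},
    {"type": "session", "visitor_id": "v-17", "pages": ["/home", "/pricing"]},
]

# "Store now": serialise each document as one JSON line, whatever its shape.
stored_blob = "\n".join(json.dumps(doc) for doc in raw_documents)


def load_documents(blob):
    """Read the stored JSON lines back into dictionaries."""
    return [json.loads(line) for line in blob.splitlines() if line.strip()]


def count_by_type(documents):
    """Count documents per 'type', tolerating documents that lack the field."""
    return Counter(doc.get("type", "unknown") for doc in documents)


def pages_visited(documents):
    """Collect page paths from any document that happens to carry them."""
    pages = []
    for doc in documents:
        pages.extend(doc.get("pages", []))
    return pages


# "Analyse later": decide which fields matter only at read time.
documents = load_documents(stored_blob)
type_counts = count_by_type(documents)
visited = pages_visited(documents)
```

The design choice to notice is that the structure is applied when the data is read rather than when it is written, which is what makes it cheap to keep storing data whose shape you do not yet understand.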

Note: The problem of too much unstructured data being generated also led to the development of “multi-model” databases such as MarkLogic, which can store information in multiple data models such as document, graph, binary, and relational SQL.

How big data tackles the problem of unstructured data

You can think of massive amounts of unstructured data as the problem to solve and big data as the set of concepts, tools, and best practices to actually solve it.

“Big data” is neither a specific technology nor a ready-made solution. The term itself refers to the computational analysis of extremely large data sets with the aim of surfacing trends.

It’s a huge field spanning data sourcing and linking, machine learning algorithms, non-relational databases, data analysis, human interfaces, and more.

“Big data is neither a specific technology nor a ready-made solution; it’s a set of best practices for the computational analysis of large data sets.”

The ultimate value of employing big data practices is for organisations to uncover business patterns that would have otherwise been impossible to analyse manually.

This includes surfacing internal data for big enterprise companies with decades of information scattered across multiple systems and departments.

Some example industries that benefit greatly from this are:

- Publishing, where text mining uncovers patterns and trends, making decisions about which new stories to write more impactful.

- Finance, where compliance is extremely important, and intelligent automation based on previous data is a strong solution for faster compliance checks.

- Life sciences, where millions of research papers tend to sit in countless data sources, making it hard to retrieve the right information without a unified search.

These are just three of the many areas that can benefit from applying big data workflows to unstructured information, giving your enterprise an edge in the market.
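To make the text mining idea from the publishing example more concrete, here is a minimal sketch that counts recurring terms across a handful of headlines. The headlines, the stop-word list, and the `top_terms` helper are invented for illustration; real text mining pipelines are considerably more involved.

```python
import re
from collections import Counter

# A tiny, hand-picked stop-word list (an assumption for this example).
STOP_WORDS = {"the", "a", "an", "of", "in", "and", "to", "on", "for", "is", "as"}


def top_terms(documents, n=3):
    """Return the n most frequent non-stop-word terms across all documents."""
    counts = Counter()
    for text in documents:
        tokens = re.findall(r"[a-z]+", text.lower())
        counts.update(token for token in tokens if token not in STOP_WORDS)
    return counts.most_common(n)


# Made-up headlines standing in for a large archive of articles.
headlines = [
    "Central bank raises interest rates",
    "Interest rates weigh on housing market",
    "Housing market cools as rates climb",
]

trending = top_terms(headlines)
```

With these sample headlines, "rates" comes out as the most frequent term. At the scale of millions of documents, the same counting idea is what lets patterns surface that nobody could spot by reading.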

Give structure to your enterprise data

Most enterprises today deal with the problem of competitors commoditising large amounts of valuable data for end-user consumption, yet dealing with unstructured data is still hard.

Learning the proper practices and hiring the right talent to drive your big data efforts forward is difficult in a world where great developers are scarce.

That’s why Datavid is committed to making the best of each piece of data available to your enterprise by hiring and continuously training engineers in big data best practices.

If your organisation suffers from millions of documents scattered across multiple systems, slow discovery times, and duplicate studies, it is time to bring structure to your data.

Frequently Asked Questions

What is considered unstructured data?

Unstructured data is raw, unorganised information that is hard to retrieve or analyse without knowing its exact location. It can be either human- or machine-generated.

How does big data handle unstructured data?

Big data is a set of concepts and practices that aim to surface patterns and trends through the computational analysis of extremely large unstructured data sets. Big data practitioners handle unstructured data by mining it for common patterns and labelling it accordingly.