Top 5 data ingestion best practices to implement

Here are 5 data ingestion best practices for creating a robust pipeline to move data from multiple sources to a single location.

The 5 best practices for a proper data ingestion pipeline:

- Identifying your data sources

- Leveraging Extract, Load, Transform

- Evaluating technology (Kafka, Wavefront, etc.)

- Choosing the right cloud provider

- Asking for help early

A data ingestion pipeline is required to port data from multiple sources and multiple business units.

It transports data from assorted sources to a data storage medium where it is accessed, used, and analyzed.

It is the foundation for every other data analytics process. Organizing the data ingestion pipeline is a key step when transitioning to a data lake solution.

5 best practices to design a data ingestion pipeline

Let’s look at the best practices to consider while creating a data ingestion pipeline.

Practice #1: Identifying data sources

Data teams increasingly load data from different business units, third-party data sources, and unstructured data into a data lake.

This data can be derived from different sources and arrive in different formats: structured, unstructured, and streaming.

Structured data is the output of systems such as ERP and CRM and is already stored in a database with a defined structure, such as columns and data types.

Unstructured data sources include regular text files, video, audio, and documents; metadata information is required to maintain them. Streaming data comes from sensors, machines, IoT devices, and multimedia broadcasts.
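One way to make this step concrete is to keep a small inventory of sources, each tagged with its type. The sketch below is only an illustration: the source names, owners, and formats are hypothetical, and the three categories simply mirror the ones described above.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class SourceKind(Enum):
    STRUCTURED = "structured"
    UNSTRUCTURED = "unstructured"
    STREAMING = "streaming"


@dataclass(frozen=True)
class DataSource:
    name: str          # hypothetical identifier for the source
    owner: str         # business unit responsible for the source
    kind: SourceKind   # one of the three categories described above
    fmt: str           # format the data arrives in


def group_by_kind(sources):
    """Return a dict mapping each SourceKind to the names of its sources."""
    grouped = defaultdict(list)
    for source in sources:
        grouped[source.kind].append(source.name)
    return dict(grouped)


# Illustrative entries only; a real inventory would list your own systems.
SOURCES = [
    DataSource("crm_accounts", "sales", SourceKind.STRUCTURED, "sql table"),
    DataSource("erp_invoices", "finance", SourceKind.STRUCTURED, "sql table"),
    DataSource("support_call_audio", "support", SourceKind.UNSTRUCTURED, "wav"),
    DataSource("factory_sensor_feed", "operations", SourceKind.STREAMING, "json events"),
]

inventory = group_by_kind(SOURCES)
```

An inventory like this makes it easier to see which sources need batch loading, which need metadata alongside the files, and which need a streaming path.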

Practice #2: Leveraging ELT

ELT (Extract, Load, Transform) is the process of extracting data from multiple sources, loading it into a common destination, and then transforming it as each task requires.

ELT comprises 3 different sub-processes:

- Extraction: Data is exported from source to staging, not directly to the warehouse, to prevent corruption.

- Load: Extracted data is moved from the staging area to the data warehouse.

- Transformation: After loading, the required transformations, such as filtering and validating, are performed once.
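The three sub-processes can be sketched in a few lines of Python. This is a minimal illustration, not a production design: an in-memory SQLite database stands in for the data warehouse, a Python list stands in for the staging area, and the CSV content and table names are made up for the example.

```python
import csv
import io
import sqlite3

# Hypothetical source export; in practice this would come from a source system.
RAW_EXPORT = """order_id,customer,amount
1,acme,120.50
2,globex,
3,acme,80.00
"""


def extract(raw_text):
    """Extraction: parse the export into staged rows, outside the warehouse."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def load(conn, staged_rows):
    """Load: copy the staged rows into the warehouse unchanged, as text."""
    conn.execute(
        "CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT)"
    )
    conn.executemany(
        "INSERT INTO raw_orders VALUES (:order_id, :customer, :amount)",
        staged_rows,
    )


def transform(conn):
    """Transformation: filter and type the data inside the warehouse."""
    conn.execute(
        """
        CREATE TABLE orders AS
        SELECT CAST(order_id AS INTEGER) AS order_id,
               customer,
               CAST(amount AS REAL) AS amount
        FROM raw_orders
        WHERE amount <> ''
        """
    )


conn = sqlite3.connect(":memory:")  # stand-in for the data warehouse
load(conn, extract(RAW_EXPORT))
transform(conn)

totals = conn.execute(
    "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer"
).fetchall()
```

Because the raw rows are loaded before any transformation, the untouched `raw_orders` table stays available if a later task needs a different transformation of the same data.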

Practice #3: Evaluating the best available technology

With a plethora of tools and technologies available, you need to analyze which one is the most suitable according to your needs.

Helpful characteristics to look out for in a tool are:

- Time-effective in terms of delivery and rapid extraction

- Simplified user interface

- Scalability

- Cost-effectiveness

- Security
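A simple way to compare candidates against these characteristics is a weighted scoring matrix. The sketch below assumes hypothetical weights (in percent) and hypothetical 1 to 5 scores for two unnamed tools; the numbers are placeholders for your own evaluation, not measurements of any real product.

```python
# Hypothetical weights in percent; adjust them to your own priorities.
WEIGHTS = {
    "speed": 25,
    "usability": 15,
    "scalability": 25,
    "cost": 15,
    "security": 20,
}


def weighted_score(scores, weights=WEIGHTS):
    """Return the weighted average of 1-5 scores across all criteria."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[c] * scores[c] for c in weights) / sum(weights.values())


def rank(candidates, weights=WEIGHTS):
    """Return candidate names ordered from highest to lowest weighted score."""
    return sorted(
        candidates,
        key=lambda name: weighted_score(candidates[name], weights),
        reverse=True,
    )


# Placeholder scores for two hypothetical tools.
CANDIDATES = {
    "tool_a": {"speed": 5, "usability": 2, "scalability": 5, "cost": 4, "security": 3},
    "tool_b": {"speed": 3, "usability": 5, "scalability": 3, "cost": 3, "security": 4},
}

ranking = rank(CANDIDATES)
```

Writing the weights down before scoring any tool helps keep the comparison tied to your requirements rather than to whichever tool was evaluated first.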

Here is a list of tools that can handle large amounts of data.

- Apache Kafka: Kafka is an open-source application that allows you to store, read, and analyse data streams free of cost. Kafka is distributed, which means that it can run as a cluster spanning multiple servers.

- Apache NiFi: A real-time, open-source data ingestion platform built on NiagaraFiles technology and designed to manage data transfer between different source and destination systems.

- Wavefront: It offers features such as big data intelligence, enterprise application integration, data quality, and master data management.

Practice #4: Using a certified cloud provider's services

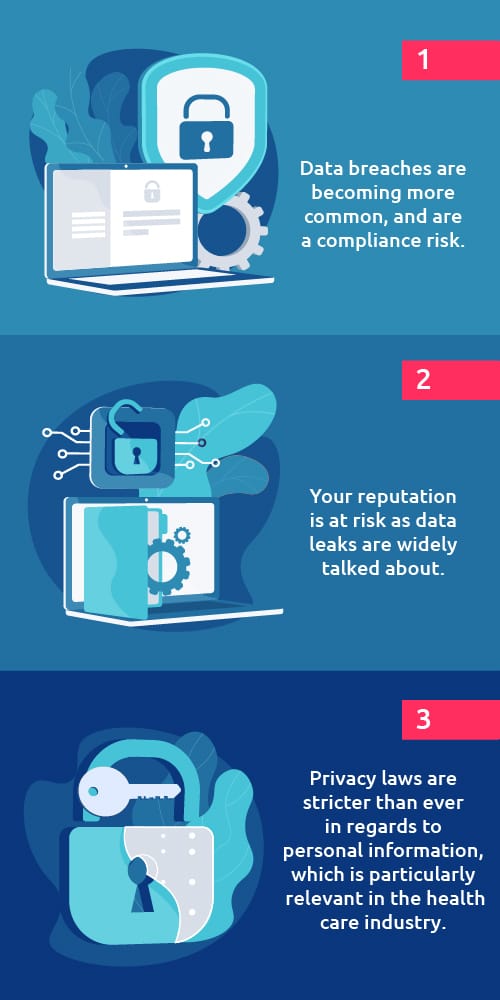

It’s essential to choose a proven, certified cloud provider that can handle your data volume and provide secure storage, since security remains a major concern.

Large-scale data breaches are becoming more common, making businesses vulnerable to losing sensitive data.

Zero-knowledge encryption, multi-factor authentication, and privacy are some of the factors that must be considered while selecting a cloud provider.

Practice #5: Asking for help early

Data ingestion is a complex process in which every stage of the pipeline matters and depends on the others. It’s recommended that you seek help from experts before ingesting data.

You need to be careful during implementation, as it’s easy to get stuck. Reach out to an expert or seek consultation as early as possible, because even a small delay or mistake can corrupt the whole system.

Deriving maximum value from your data ingestion pipeline

Creating an efficient data ingestion pipeline is a complex process. The biggest hurdle enterprises face while creating a data ingestion pipeline is data silos.

Datavid Rover solves this fundamental problem by providing out-of-the-box data ingestion for common enterprise data sources like SAP ERP, Microsoft Dynamics, etc.

Our team of expert consultants can help you set up your data hub with built-in connectors or build completely new ones for your specific use case.

Frequently Asked Questions

How does the data pipeline work?

A data pipeline comprises a set of steps to move data from multiple sources to a single destination.

What are the best practices to follow while designing a data ingestion pipeline?

Identifying data sources, leveraging ELT, deploying data ingestion tools, using the services of a certified cloud provider, and seeking recommendations early in the process of designing a data ingestion pipeline are the best practices.

What are the key elements of a data pipeline?

The key elements of a data pipeline are the data source, the data processing steps, and the data destination.
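These three elements map naturally onto a small piece of code. The sketch below is illustrative only: the records, the two processing steps, and the list used as a destination are hypothetical stand-ins for real systems.

```python
def run_pipeline(source, steps, destination):
    """Pass records from the source through each step into the destination.

    Returns the number of records written.
    """
    records = source
    for step in steps:
        records = step(records)
    written = 0
    for record in records:
        destination.append(record)
        written += 1
    return written


def drop_incomplete(records):
    """Processing step: skip records that have no reading."""
    return (r for r in records if r.get("value") is not None)


def add_unit(records):
    """Processing step: tag each reading with an assumed unit."""
    return ({**r, "unit": "celsius"} for r in records)


# Data source: hypothetical sensor readings.
source = [
    {"sensor": "a", "value": 21.5},
    {"sensor": "b", "value": None},
    {"sensor": "c", "value": 19.0},
]

# Data destination: a list standing in for a table or a data lake location.
destination = []

written = run_pipeline(source, [drop_incomplete, add_unit], destination)
```

Keeping each step as a separate function means a step can be added, removed, or reordered without touching the source or the destination.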